As data continues to grow exponentially, businesses and individuals alike face the challenge of maintaining high-performance servers. For those keen on maximizing their server performance while working within a budgetary constraint, every decision, big or small, can be crucial.

From the intricacies of hardware selection to the nuances of code deployment, this comprehensive guide endeavors to walk you through pivotal areas, enabling you to ensure that your server operates at its absolute peak without causing a financial strain.

Hardware Selection, Server Location, and Cooling

A successful server’s foundation is built upon its hardware. Making informed decisions in this domain can lead to substantial savings in both time and money. When choosing a CPU, balance core count against clock speed according to your server’s designated purpose: highly parallel workloads benefit from more cores, while single-threaded workloads benefit from higher per-core speed.

Contrary to the view that RAM is a mere supplementary component, sufficient capacity and faster generations such as DDR4 or its successors can provide a noticeable performance improvement, particularly for memory-bound workloads.

The excellence of hardware components can be undermined by an inadequate environment. The geographical placement of your server plays a crucial role, especially concerning temperature regulation.

Opting for data centers situated in cooler climates can lower cooling costs, while an on-premises setup gives you more direct control over the cooling mechanisms. Efficient cooling not only extends your server’s lifespan but also considerably reduces energy expenditure.

Workload Prioritization, Monitoring, and Analytics

In the intricate ecosystem of a server, tasks have varying degrees of importance. It’s crucial to prioritize workloads in alignment with their significance and the demand they command.
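One way to picture prioritization is a priority queue, where the most important pending task is always picked first. The following is a minimal sketch in Python; the `WorkQueue` class, the task names, and the priority numbers are all hypothetical and only illustrate the idea.

```python
import heapq
import itertools


class WorkQueue:
    """Serves lower priority numbers first; ties keep submission order."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # tie-breaker for equal priorities

    def submit(self, priority, name):
        heapq.heappush(self._heap, (priority, next(self._order), name))

    def next_task(self):
        """Return the most important pending task name, or None if empty."""
        if not self._heap:
            return None
        _, _, name = heapq.heappop(self._heap)
        return name


queue = WorkQueue()
queue.submit(2, "nightly-report")    # hypothetical batch job
queue.submit(0, "checkout-request")  # hypothetical customer-facing request
queue.submit(1, "cache-warmup")      # hypothetical maintenance task
first = queue.next_task()            # the customer-facing request comes out first
```

In a real deployment the same effect is usually achieved with the scheduler or job queue you already run, rather than with hand-written code.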

Regular monitoring, coupled with robust analytics tools, helps identify potential bottlenecks and resource-hungry components. Acting on these findings proactively ensures that servers, such as Australia dedicated servers, maintain smooth operations even during demanding peak periods.
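As a rough illustration of bottleneck detection, the sketch below averages utilization readings per resource and flags anything above a threshold. The function name, the 85% threshold, and the sample readings are assumptions made for the example; a real setup would pull these numbers from your monitoring tool.

```python
from statistics import mean


def find_hot_resources(samples, threshold=0.85, min_samples=3):
    """Return resources whose average utilization exceeds the threshold.

    `samples` maps a resource name to a list of readings between 0.0 and 1.0.
    Resources with fewer than `min_samples` readings are skipped.
    """
    hot = {}
    for name, readings in samples.items():
        if len(readings) < min_samples:
            continue  # too little data to judge
        average = mean(readings)
        if average > threshold:
            hot[name] = round(average, 2)
    return hot


# Hypothetical readings collected over three intervals.
readings = {
    "cpu": [0.91, 0.88, 0.95],
    "memory": [0.60, 0.62, 0.58],
    "disk_io": [0.97],  # only one reading, so it is not judged yet
}
hot = find_hot_resources(readings)
```

Averaging over several readings, rather than reacting to a single spike, keeps short bursts from triggering false alarms.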

Resource Requirements and Management

Gaining a thorough understanding of your operational requirements is pivotal in streamlining resource allocation. Tools like Docker have changed this space by isolating applications in containers and letting you cap the CPU and memory each one may use, so that applications receive the resources they need without unnecessary over-provisioning.
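To make the idea of capped resources concrete, here is a small sketch that builds a `docker run` command using Docker’s `--memory` and `--cpus` flags. The helper name, the container name, and the chosen limits are illustrative assumptions; the script only assembles the command and does not run it.

```python
import shlex


def docker_run_command(image, name, memory="512m", cpus=1.0):
    """Build a `docker run` argument list with memory and CPU limits."""
    return [
        "docker", "run", "--detach",
        "--name", name,
        "--memory", memory,   # hard memory cap for the container
        "--cpus", str(cpus),  # CPU share, e.g. 0.5 means half a core
        image,
    ]


# Hypothetical example: a small web container limited to 256 MB and half a core.
command = docker_run_command("nginx:stable", "web", memory="256m", cpus=0.5)
print(shlex.join(command))
```

Starting with conservative limits and raising them based on monitoring data is generally cheaper than over-provisioning up front.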

Furthermore, embracing automation across as many processes as feasible not only ensures a more streamlined management approach but also minimizes the chances of manual errors, which can be costly in terms of both time and money.

Server Virtualization and Energy Consumption Reduction

The advent of virtualization has indeed rewritten many server management rules. This transformative technology lets you partition a single physical server into multiple virtual entities, thus facilitating optimal resource utilization and slashing operational costs.

In this realm, tools like VMware or Hyper-V emerge as powerful allies. In tandem, making deliberate efforts to adopt green computing principles, such as leveraging energy-efficient hardware and software, can remarkably trim down your energy expenses.
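To see why partitioning one machine into several virtual ones saves money, consider how many physical hosts a set of virtual machines actually needs. The sketch below uses a simple first-fit decreasing heuristic on memory alone; the VM names, their sizes, and the 32 GB host capacity are hypothetical, and real planners also weigh CPU, storage, and redundancy.

```python
def pack_vms(vm_memory_gb, host_capacity_gb):
    """Assign VMs to hosts with first-fit decreasing; returns a list of VM-name lists."""
    hosts = []  # each entry: {"free": remaining GB, "vms": [names]}
    by_size = sorted(vm_memory_gb.items(), key=lambda item: item[1], reverse=True)
    for name, size in by_size:
        if size > host_capacity_gb:
            raise ValueError(f"{name} does not fit on any host")
        for host in hosts:
            if host["free"] >= size:
                host["free"] -= size
                host["vms"].append(name)
                break
        else:
            # No existing host has room, so bring another one online.
            hosts.append({"free": host_capacity_gb - size, "vms": [name]})
    return [host["vms"] for host in hosts]


# Hypothetical workloads that might otherwise each get their own machine.
vms = {"web": 8, "db": 24, "cache": 16, "ci": 12, "mail": 4}
placement = pack_vms(vms, host_capacity_gb=32)
host_count = len(placement)
```

With these made-up numbers, five workloads fit on two hosts instead of five, which is where the savings in hardware and energy come from.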

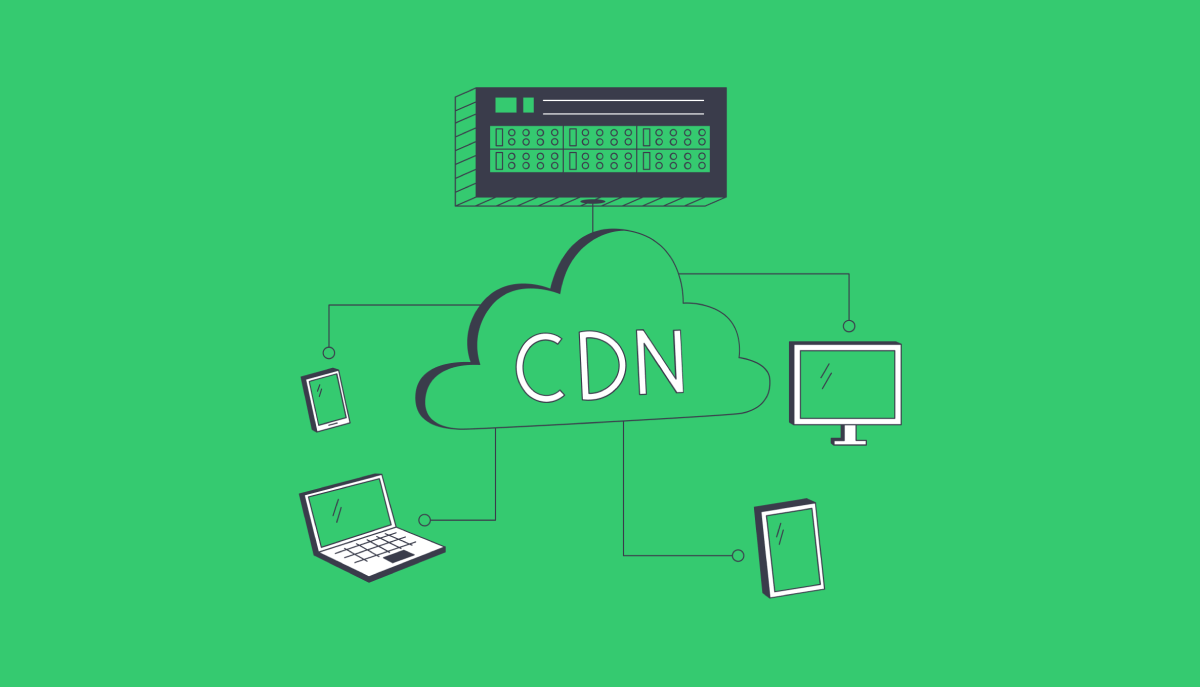

VPS, CDNs, and Caching Mechanisms

In the quest for efficiency without excessive expenditure, Virtual Private Servers (VPS) emerge as a beacon, offering a guaranteed share of a physical server’s resources without the hefty price tag of a full dedicated server.

When it comes to content delivery, Content Delivery Networks (CDNs) act as a force multiplier, caching your content on servers close to a global audience and thereby lightening the load on your primary server. To enhance this, it’s beneficial to put in place robust caching mechanisms.

These systems store frequently accessed data, eliminating the recurring need to retrieve it, resulting in noticeably swifter response times.
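A caching mechanism can be sketched in a few lines. The example below is a minimal time-to-live (TTL) cache; the class name, the 60-second lifetime, and the `load_page` function are assumptions for illustration, standing in for whatever expensive lookup your server performs.

```python
import time


class TTLCache:
    """Keeps loaded values for `ttl_seconds`, then reloads them on next access."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: skip the expensive load
        value = loader(key)
        self._entries[key] = (now + self._ttl, value)
        return value


calls = []


def load_page(path):
    # Hypothetical expensive operation, such as rendering a page from a database.
    calls.append(path)
    return f"<html>{path}</html>"


cache = TTLCache(ttl_seconds=60)
cache.get_or_load("/home", load_page)
cache.get_or_load("/home", load_page)  # served from the cache, no second load
```

The TTL is the trade-off to tune: a longer lifetime means fewer loads but staler content.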

Operating System, Database Optimization

Your choice of operating system can be a defining factor in server performance. Linux, as an example, is versatile, offering distributions that are tailored for server functions.

On the database front, it’s not just about having a system in place but ensuring regular maintenance, strategic indexing, and selecting the right database system. These steps are instrumental in averting potential slowdowns and guaranteeing fluid data access and retrieval.
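The effect of strategic indexing is easy to observe with SQLite, which ships with Python. The sketch below asks the database how it plans to run the same lookup before and after an index is added; the `orders` table, its columns, and the index name are made up for the example.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return SQLite's plan for a query as one readable string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)  # column 3 holds the plan detail


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

lookup = "SELECT total FROM orders WHERE customer_id = ?"
plan_before = query_plan(conn, lookup, (42,))  # a full scan of the table

conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, lookup, (42,))   # a search using the new index

print(plan_before)
print(plan_after)
```

Other database systems offer a similar `EXPLAIN` facility, and checking it for your slowest queries is a cheap way to decide which indexes are worth adding.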

Minimalistic Setup, Content Management, and Optimization

In many server scenarios, embracing minimalism can be the key. A stripped-down, minimalistic server setup can significantly decrease potential points of malfunction while enhancing overall performance.

In tandem, the integration of efficient content management systems, like WordPress fortified with optimization plugins, can significantly reduce server response times, translating to a more refined user experience.

Resource Scaling, Security, and Collaboration Tools

With the growth in traffic and demands, the server’s ability to dynamically scale becomes indispensable. Implementing adaptive auto-scaling solutions can aptly address these varying demands.
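At its core, an auto-scaling decision is a small calculation. The sketch below uses a simple proportional rule: scale the number of instances by the ratio of observed load to target load, then clamp the result. The function name, the percentage figures, and the limits are assumptions for illustration; managed auto-scalers add cooldowns and smoothing on top of rules like this.

```python
import math


def desired_replicas(current_replicas, current_load, target_load,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to load, within fixed bounds."""
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    wanted = math.ceil(current_replicas * current_load / target_load)
    return max(min_replicas, min(max_replicas, wanted))


# Hypothetical CPU utilization percentages.
scale_up = desired_replicas(4, current_load=90, target_load=60)    # busier than target
scale_down = desired_replicas(4, current_load=30, target_load=60)  # quieter than target
capped = desired_replicas(8, current_load=95, target_load=50)      # limited by max_replicas
```

The upper bound matters for the budget as much as for stability, since it caps how much a traffic spike can cost.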

On the security front, the importance of regular software updates, properly configured firewalls, and other protective measures can’t be overstated. Moreover, incorporating collaboration tools not only boosts team productivity but also helps the team coordinate changes so that the server’s integrity remains uncompromised.

Load Testing, CDN Integration, and Compression

To keep your server in top form, instituting regular load testing can provide invaluable insights, spotlighting potential performance choke points before they become critical issues.
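A load test boils down to issuing many requests at once and looking at the slowest ones. The sketch below is a minimal harness built on Python’s standard library; `fake_request` is a stand-in for a real call to your server, and the request count and concurrency are arbitrary values chosen for the example. Dedicated load-testing tools do far more, but the principle is the same.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def run_load_test(request_fn, total_requests, concurrency):
    """Call request_fn from several threads and return sorted latencies in seconds."""
    def timed_call(_):
        start = time.perf_counter()
        request_fn()
        return time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return sorted(pool.map(timed_call, range(total_requests)))


def percentile(sorted_values, fraction):
    """Nearest-rank percentile of an already sorted list."""
    if not sorted_values:
        raise ValueError("no values to summarize")
    rank = max(1, math.ceil(fraction * len(sorted_values)))
    return sorted_values[rank - 1]


def fake_request():
    time.sleep(0.001)  # hypothetical stand-in for a real HTTP request


latencies = run_load_test(fake_request, total_requests=50, concurrency=5)
p95 = percentile(latencies, 0.95)
print(f"p95 latency: {p95 * 1000:.2f} ms")
```

Tracking a high percentile rather than the average highlights the slow requests that users actually notice.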

Complementing this with CDN integration can further alleviate stress on your server. Concurrently, the adoption of compression methods, like gzip, can drastically reduce the volume of data your server needs to transmit, culminating in accelerated load times.
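The saving from gzip is easy to measure. The sketch below compresses a repetitive HTML-like payload with Python’s `gzip` module and skips very small bodies, where compression overhead can outweigh the benefit. The helper name, the 256-byte cutoff, and the sample payload are assumptions for illustration; in practice the web server usually handles this for you.

```python
import gzip


def compress_response(body: bytes, min_size: int = 256):
    """Return (payload, encoding); bodies under min_size are sent unchanged."""
    if len(body) < min_size:
        return body, "identity"
    return gzip.compress(body), "gzip"


# Hypothetical page body with a lot of repeated markup.
page = b"<li>product row</li>\n" * 500
payload, encoding = compress_response(page)
ratio = len(payload) / len(page)
print(f"{len(page)} bytes -> {len(payload)} bytes ({encoding})")
```

Text formats such as HTML, CSS, and JSON compress well, while already compressed files such as JPEG images gain little.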

Workload Distribution, Database Management, and Scalability

Optimizing server performance necessitates an equitable distribution of its workload across multiple machines or services. Such strategic dispersion not only curtails the risk of a single point of system failure but also augments overall efficiency.
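One common way to spread work across machines is a least-connections strategy, where each new request goes to the backend with the fewest requests in flight. The sketch below is a minimal, single-threaded illustration; the class name and backend names are hypothetical, and production load balancers also handle health checks and concurrency.

```python
class LeastConnectionsBalancer:
    """Sends each request to the backend with the fewest active requests."""

    def __init__(self, backends):
        self._active = {backend: 0 for backend in backends}

    def acquire(self):
        # Ties resolve to the backend listed first.
        backend = min(self._active, key=self._active.get)
        self._active[backend] += 1
        return backend

    def release(self, backend):
        if self._active[backend] > 0:
            self._active[backend] -= 1


balancer = LeastConnectionsBalancer(["app-1", "app-2", "app-3"])
picks = [balancer.acquire() for _ in range(4)]  # spreads across all three first
balancer.release("app-2")                       # app-2 finishes its request
next_pick = balancer.acquire()                  # so app-2 is now the least busy
```

Compared with plain round-robin, this approach adapts when some requests take much longer than others.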

Ensuring regular database backups, coupled with advanced techniques like sharding and replication, fortifies data integrity and sets the stage for scalable growth.
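Sharding needs a stable rule for deciding which shard holds a given record. The sketch below hashes a key and takes the result modulo the number of shards. The function name, the key format, and the shard count are assumptions for illustration; `hashlib` is used because Python’s built-in `hash()` for strings varies between processes.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a key to a shard index in a stable, evenly spread way."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


# Hypothetical customer keys spread over four shards.
assignments = {f"customer-{i}": shard_for(f"customer-{i}", 4) for i in range(1000)}
shards_used = set(assignments.values())
```

One caveat with this simple scheme is that changing the shard count moves most keys to a different shard, which is why larger systems often turn to consistent hashing.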

Virtualization Techniques, Downtime Prevention, and Cloud Services

While traditional virtualization has its merits, modern solutions like containerization, typically orchestrated with tools such as Kubernetes, offer a new horizon. When coupled with rigorous downtime prevention measures like consistent backups and failover mechanisms, the system becomes more resilient.
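A failover mechanism can be as simple as trying a standby when the primary does not answer. The sketch below shows that pattern in Python; the endpoint names and the `fake_request` function are hypothetical stand-ins for real servers and real network calls.

```python
def call_with_failover(endpoints, request_fn):
    """Try each endpoint in order; return (endpoint, response) from the first that works."""
    errors = []
    for endpoint in endpoints:
        try:
            return endpoint, request_fn(endpoint)
        except ConnectionError as exc:
            errors.append(f"{endpoint}: {exc}")
    raise ConnectionError("all endpoints failed: " + "; ".join(errors))


def fake_request(endpoint):
    # Hypothetical stand-in for a network call: the primary is "down".
    if endpoint == "primary.internal":
        raise ConnectionError("connection refused")
    return "200 OK"


served_by, response = call_with_failover(
    ["primary.internal", "standby.internal"], fake_request
)
```

Failover only helps if the standby is kept up to date, which is where consistent backups and replication come in.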

Additionally, harnessing the potential of cloud services provides an added layer of redundancy, offering an expansive pool of resources.

Efficient Code Deployment, Performance Monitoring, and Resource Consolidation

At the heart of it all, your server’s effectiveness hinges on the code it runs. Employing Continuous Integration/Continuous Deployment (CI/CD) pipelines automates building, testing, and releasing changes, making for a more seamless and repeatable deployment process.

Instituting a regime of frequent performance monitoring allows for the timely identification and rectification of potential issues. And, wherever feasible, consolidating resources can substantially cut overheads, pushing the efficiency quotient even higher.

In summary, to truly harness the potential of a dedicated server’s performance within a budget, one must craft a symphony of hardware selections, software decisions, and strategic planning.

By diligently implementing the best practices elucidated above, not only will your server be a beacon of efficiency, but its cost-effectiveness over the long haul will be a testament to your foresight.